Database migration is a complex process that demands careful assessment to ensure data integrity, application performance, and overall system reliability. The OpenAI API, with its natural language processing capabilities, offers a way to simplify this process by automating assessments and summarizing key points. This guide walks you through using the AWS Schema Conversion Tool (AWS SCT) for initial assessments, integrating the OpenAI API with Python to generate assessment summaries, and understanding the requirements for connecting to the Azure OpenAI API, as well as how it differs from calling the OpenAI API (the platform behind ChatGPT) directly.

Kickstarting Your Migration: Utilizing AWS SCT for Comprehensive Database Assessment

The Amazon Web Services Schema Conversion Tool (AWS SCT) simplifies database migration from one platform to another. It assesses your existing database schema and generates a detailed report on potential migration issues. Supporting a wide range of source and target databases, AWS SCT is versatile for many migration scenarios.

AWS SCT examines your database schema, identifies non-convertible elements, and produces a comprehensive report. This report, containing potential action items, is crucial for planning your migration, offering an overview of the complexity, potential challenges, and the effort required.

The report, in PDF format, provides a detailed view of your database schema, potential issues, and recommendations. It is invaluable for database administrators and engineers, but it is also long and complex, which makes it a good candidate for summarization with the OpenAI API.

Transforming PDFs into Comprehensive Assessment Summaries

With the AWS SCT report in hand, the next step is to use the OpenAI API’s natural language processing capabilities. Given the text of the PDF report, the model can extract key points and summarize the information in a more accessible format.

Using the Python package pymupdf, we scan the PDF and convert its contents to text. This text is then fed to OpenAI API to highlight important sections and summarize the findings, including potential issues and recommended actions.

The Python method process_directory reads each PDF, converts it to text, and then passes this text to another method, generate_summary, which calls the OpenAI API to generate a concise assessment summary.

Method: process_directory()

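import os

def process_directory(directory):
    """Processes each PDF file in the given directory to generate a summary."""
    # The directory name is expected to look like <hostname>_<port>_<database>.
    # Use only the last path component so a full path does not break the split.
    folder_name = os.path.basename(os.path.normpath(directory))
    hostname, port_number, database_name = folder_name.split('_')
    for file in os.listdir(directory):
        if file.endswith('.pdf'):
            file_path = os.path.join(directory, file)
            pdf_text = extract_text_from_pdf(file_path)
            summary = generate_summary(pdf_text)
            print(f"Summary for {file} ({hostname}, {port_number}, {database_name}):\n{summary}\n")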
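process_directory calls extract_text_from_pdf, which is not shown in this post. A minimal sketch of what such a helper could look like with pymupdf follows. The function body and the join_pages helper are my assumptions for illustration, not the exact code from the framework.

```python
def join_pages(page_texts):
    """Join per-page text into one string, skipping empty pages."""
    cleaned = (text.strip() for text in page_texts if text)
    return "\n".join(text for text in cleaned if text)


def extract_text_from_pdf(file_path):
    """Return the plain text of every page in the PDF at file_path."""
    # Imported lazily so the module loads even where PyMuPDF is not installed.
    try:
        import pymupdf  # recent PyMuPDF releases
    except ImportError:
        import fitz as pymupdf  # older releases expose the same API as "fitz"
    with pymupdf.open(file_path) as doc:
        # page.get_text() returns the plain text of a single page.
        return join_pages(page.get_text() for page in doc)
```

Scanned reports that contain only images would come back empty with this approach. SCT reports are text-based PDFs, so plain text extraction should normally be enough.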

Method: generate_summary()

import openai

def generate_summary(text):
    """Generates a summary for the given text using OpenAI's API."""
    # Requires openai>=1.0; the API key is read from OPENAI_API_KEY by default.
    response = openai.chat.completions.create(
        model="gpt-4",
        messages=[
            # Adjacent string literals are concatenated, which avoids the stray
            # whitespace that a backslash continuation inside a string would add.
            {"role": "system", "content": "You are a database "
                "reliability engineer providing a migration "
                "assessment summary."},
            {"role": "user", "content": "Summarise the output "
                "of assessment text: \n" + text}
        ],
        temperature=0.4,
        max_tokens=150,
        top_p=1.0,
        frequency_penalty=0.0,
        presence_penalty=0.0
    )
    summary = response.choices[0].message.content.strip()
    return summary
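One practical caveat: an SCT report can be long, and the text passed to generate_summary may exceed the model's context window. A possible way around this, sketched below, is to split the text on paragraph boundaries, summarise each piece, and then summarise the partial summaries. The helper names chunk_text and summarize_long_text and the 8000-character budget are hypothetical choices for illustration, not part of the original module or a limit taken from the OpenAI documentation.

```python
def chunk_text(text, max_chars=8000):
    """Split text into chunks of at most max_chars, breaking on blank lines.

    max_chars is an illustrative budget. A single paragraph longer than
    max_chars is hard-split so that no chunk exceeds the budget.
    """
    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        # Hard-split oversized paragraphs.
        while len(paragraph) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(paragraph[:max_chars])
            paragraph = paragraph[max_chars:]
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def summarize_long_text(text, summarize, max_chars=8000):
    """Summarise each chunk, then summarise the combined partial summaries."""
    partials = [summarize(chunk) for chunk in chunk_text(text, max_chars)]
    if len(partials) <= 1:
        return partials[0] if partials else ""
    return summarize("\n".join(partials))
```

With this in place, process_directory could call summarize_long_text(pdf_text, generate_summary) instead of generate_summary(pdf_text). Passing the summariser in as an argument also makes the chunking logic easy to test without calling the API.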

Understanding OpenAI API Parameters

Understanding the role and impact of various OpenAI API parameters is crucial for tailoring your query results. Here’s a brief overview:

temperature (0.4): This parameter controls the level of creativity or randomness in the responses generated by the model. A lower temperature, such as 0.4, results in more predictable and conservative outputs. Conversely, a higher temperature encourages diversity and creativity in the answers.

max_tokens (150): Specifies the maximum length of the generated response, measured in tokens (sub-word units; a token is often a short word or part of a word). Setting this to 150 caps the response at 150 tokens, which keeps summaries short but also means that longer output is cut off at the limit.

top_p (1.0): Also known as “nucleus sampling,” this parameter restricts sampling to the smallest set of most likely tokens whose combined probability reaches top_p. A value of 1.0 means no filtering is applied, allowing any token to be considered. Lowering this value focuses generation on the more likely tokens, which can improve relevance and coherence.

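frequency_penalty (0.0): Adjusts the likelihood of the model repeating the same text. A value of 0.0 applies no penalty, so the model can freely reuse tokens. Increasing this value penalizes tokens in proportion to how often they have already appeared, which discourages repetition.

presence_penalty (0.0): Penalizes tokens that have already appeared at all, regardless of how often, which nudges the model toward new topics. A value of 0.0 applies no penalty.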
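To make temperature and top_p less abstract, here is a small toy illustration in plain Python. It is a simplified sketch of the general sampling technique, not OpenAI's actual implementation, and the function names are my own.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature must be greater than 0."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalise. Returns {token_index: probability}."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


logits = [2.0, 1.0, 0.0]
low = softmax_with_temperature(logits, 0.4)   # sharper: top token dominates
high = softmax_with_temperature(logits, 1.0)  # flatter: more variety
```

A lower temperature concentrates probability on the top-scoring token, while a top_p below 1.0 removes the unlikely tail before sampling. With top_p at 1.0, as in generate_summary, every token stays in play.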

The Python methods above generated a modest summary from my sandbox environment. It is modest at this point, and we can take this much further. Below is a small part of the whole summary, describing the migration effort from a Microsoft SQL Server 2019 database to Amazon RDS for PostgreSQL.

Migration Plan Summary

Source Database

- AdventureWorks2019.MSSQL

- Microsoft SQL Server 2019 (RTM-CU22-GDR) – 15.0.4326.1 (X64)

- Standard Edition (64-bit) on Windows Server 2019 Datacenter

- Case sensitivity: OFF

Target Platform:

Assessment Findings:

- Storage Objects: 100% can be converted automatically or with minimal changes.

- Code Objects: 77% can be converted automatically or with minimal changes.

- Estimated 99.9% of code can be converted to AWS RDS for PostgreSQL automatically.

- 515 conversion actions recommended ranging from simple to complex tasks


The AI-generated summary above can be a significant time saver for database administrators and engineers. Instead of going through pages of detailed reports, they can glance through the summary and understand the key points. It can also serve as a reference during the migration process, helping to avoid potential issues and ensure a smooth transition.

Building a Fully Automated OpenAI-Powered Python Module for PDF Analysis and Summary Generation

To generate the assessment summary using the OpenAI API, I developed the Python methods described above. These methods are components of a larger assessment framework that I’m currently developing. In this article, we focus exclusively on the integration with the OpenAI API. It’s worth noting that the PDF files used as input are generated through a fully automated process. However, the details of that process are beyond the scope of this blog post.

Python, with its versatility and powerful capabilities, is ideal for integrating with the OpenAI API. It offers libraries for API interactions and processing PDF files, enabling the automation of the entire workflow—from reading PDF files to generating summaries.

For the initial step, libraries such as PyPDF2, PDFMiner, or pymupdf—which I prefer—can be utilized to read the contents of PDF files. After extracting the text, this information can be processed by the OpenAI API. The API is designed to analyze the text, pinpoint the essential information, and compile a concise summary.

Subsequently, this summary can be saved either as a text file or within a database for easy access in the future. Moreover, the module can be configured to insert summaries into a database table, integrating them into a larger assessment data repository. This data can then be leveraged for generating reports, such as Power BI dashboards or other forms of reporting, allowing key stakeholders to stay informed about the migration process’s progress.
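As a rough sketch of the database option, the snippet below stores each summary in SQLite using only the standard library. The table name assessment_summary, its columns, and the save_summary helper are hypothetical; a real assessment repository would likely use its own schema and database engine.

```python
import sqlite3
from datetime import datetime, timezone


def save_summary(conn, hostname, port_number, database_name, file_name, summary):
    """Insert one summary row, creating the (hypothetical) table if needed."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS assessment_summary (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            hostname TEXT NOT NULL,
            port_number INTEGER NOT NULL,
            database_name TEXT NOT NULL,
            file_name TEXT NOT NULL,
            summary TEXT NOT NULL,
            created_at TEXT NOT NULL
        )
        """
    )
    # Parameterised query: values are bound, never formatted into the SQL.
    conn.execute(
        "INSERT INTO assessment_summary "
        "(hostname, port_number, database_name, file_name, summary, created_at) "
        "VALUES (?, ?, ?, ?, ?, ?)",
        (hostname, int(port_number), database_name, file_name, summary,
         datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()
```

In process_directory, the print call could be complemented with save_summary(conn, hostname, port_number, database_name, file, summary), where conn comes from sqlite3.connect("assessments.db"). A reporting tool can then query the table directly.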

Setting Up Azure OpenAI API: Essentials and Differences from ChatGPT

Azure OpenAI is a cloud service that lets developers integrate OpenAI’s models into their applications. To use it, you need an Azure subscription, an Azure OpenAI resource with a deployed model, and an API key, together with the resource endpoint, to authenticate API requests.

There are notable differences between utilizing ChatGPT and the Azure OpenAI API.

When calling the OpenAI API directly (the ChatGPT case in this article), your Python module only needs openai.api_key to be set and a model name such as “gpt-4”, as in my example code. Integrating with Azure OpenAI requires additional configuration:

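import os
import openai

# Module-level settings for openai>=1.0, matching the chat.completions call used earlier.
openai.api_type = "azure"
openai.azure_endpoint = os.getenv('AZURE_OPENAI_ENDPOINT')  # replaces the pre-1.0 openai.api_base
openai.api_key = os.getenv('AZURE_OPENAI_API_KEY')
openai.api_version = os.getenv('AZURE_OPENAI_VERSION')
# Name of your model deployment; pass it as model=deployment_id.
deployment_id = os.getenv('AZURE_OPENAI_DEPLOYMENTID')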

It’s important to note that with Azure OpenAI, the model parameter of the Python OpenAI API takes your deployment name rather than a model name such as “gpt-4”, which is what my earlier examples used.
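An alternative to module-level settings is an explicit client object. The sketch below assumes openai>=1.0, which provides the AzureOpenAI class, and reuses the environment variable names from this post. The helpers load_azure_settings and make_azure_client are hypothetical names I use for illustration.

```python
import os

REQUIRED_VARS = (
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "AZURE_OPENAI_VERSION",
    "AZURE_OPENAI_DEPLOYMENTID",
)


def load_azure_settings(env=None):
    """Read the Azure settings and fail early if any variable is missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


def make_azure_client(settings):
    """Build an Azure client from the settings returned above."""
    from openai import AzureOpenAI  # imported lazily; requires openai>=1.0
    return AzureOpenAI(
        azure_endpoint=settings["AZURE_OPENAI_ENDPOINT"],
        api_key=settings["AZURE_OPENAI_API_KEY"],
        api_version=settings["AZURE_OPENAI_VERSION"],
    )
```

A call then looks like client.chat.completions.create(model=settings["AZURE_OPENAI_DEPLOYMENTID"], messages=[...]), with the deployment name taking the place of “gpt-4”. Checking the variables up front gives a clearer error than a failed request later on.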

Azure OpenAI and the OpenAI API give access to the same family of models, so the summarization code itself barely changes. The practical differences lie in how the service is hosted, how requests are authenticated, and how models are addressed: Azure OpenAI runs inside your Azure subscription and is called through a named deployment, whereas the OpenAI API is called with a model name and an OpenAI-issued key. ChatGPT itself is a conversational product built on these models rather than an API.

Choosing between the two for summarizing database migration assessments hinges on your project’s needs. Azure OpenAI is the natural choice when the assessment framework already lives in Azure or must follow its access and compliance controls. Calling the OpenAI API directly is simpler to set up when those constraints do not apply.

In summary, the OpenAI API can considerably streamline the database migration assessment process. The AWS Schema Conversion Tool yields a thorough report on your database schema and potential issues, which the OpenAI API can condense efficiently. A Python module automates this summarization, saving both time and resources. Whether you choose Azure OpenAI or the OpenAI API directly, both offer capable models to support your database migration work.

Donate

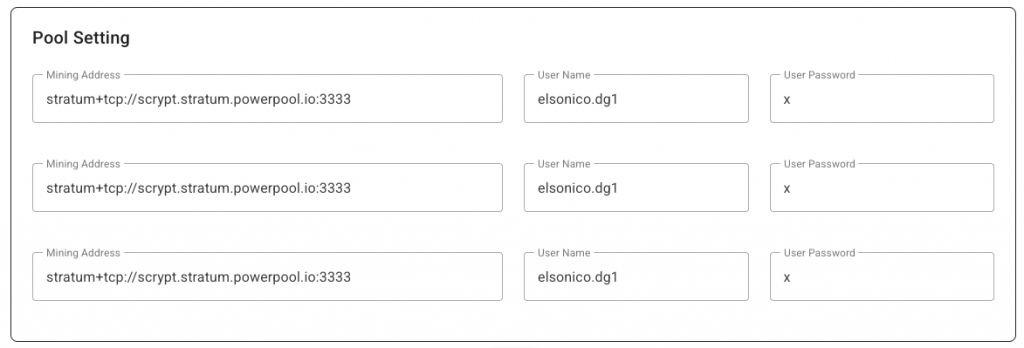

Wallet address of bitcoin

13JDhP1YScpJosCbkXwWUeS

Like this:

Like Loading...